Introduction:

In today's web environment, users expect a smooth and consistent experience. As websites and applications scale, they typically place a load balancer in front of several servers to share the traffic. When an application keeps per-user state, such as a login or a shopping cart, that state must stay available from one request to the next. Sticky sessions are one common way to achieve this. In this blog post, we will look at what sticky sessions are and explore several strategies for achieving them effectively.

What are Sticky Sessions?

Sticky sessions, also known as session affinity or session persistence, refer to the practice of routing every request in a user's session to the same backend server for the duration of their interaction with a web application. Without affinity, a load balancer spreads requests across servers (for example, round-robin), so consecutive requests from one user can land on different machines. With sticky sessions, the user's session state can live on a single server. This matters most for applications that keep user-specific data in server memory or rely on session-based functionality such as login state or a shopping cart.
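At its core, session affinity is a lookup table kept by the load balancer: the first request of a session is assigned a backend, and every later request carrying the same session key goes to that backend. The sketch below assumes round-robin for new sessions; the `StickyBalancer` class and the backend names are hypothetical, and a real load balancer would also expire entries and handle server failures.

```python
import itertools


class StickyBalancer:
    """Minimal session-affinity table (hypothetical illustration, not a real LB API)."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(backends)  # round-robin for brand-new sessions
        self._affinity = {}  # session key -> assigned backend

    def route(self, session_key):
        # Pin the session to a backend on its first request, then reuse that choice.
        if session_key not in self._affinity:
            self._affinity[session_key] = next(self._cycle)
        return self._affinity[session_key]


balancer = StickyBalancer(["app-1", "app-2", "app-3"])
print(balancer.route("alice"))  # app-1 (first new session)
print(balancer.route("bob"))    # app-2 (next backend in the rotation)
print(balancer.route("alice"))  # app-1 again: same session, same server
```

The strategies below differ mainly in what they use as the session key: the client IP, a cookie, or a token in the URL.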

Benefits of Sticky Sessions:

1. Session Persistence: Sticky sessions ensure that user-specific data remains consistent and accessible across multiple requests, as the user's session is tied to a specific server. This is particularly useful for applications that rely on session variables or personalized content.

2. Enhanced User Experience: By keeping the session state on one server, sticky sessions prevent disruptions such as a user being unexpectedly logged out or seeing an empty cart because a request reached a server that does not hold their session. This leads to a smoother, more seamless user experience.

3. Load Balancing Flexibility: Affinity only applies once a session has been assigned, so administrators can still distribute new sessions according to server capabilities and workload, for example by sending more new users to larger servers. Keep in mind the trade-off: a pinned session cannot be moved to a less busy server, so long-lived sessions can leave the load uneven.

Strategies to Achieve Sticky Sessions:

1. IP-Based Affinity:

- Assign users to a specific backend server based on their IP address.

- Configure load balancers to direct requests from the same IP address to the same server.

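- This method is relatively simple to implement, but it breaks down when users are behind a proxy or NAT (Network Address Translation): many users then share one IP address and all land on the same server, while a user whose IP address changes (for example, a mobile client switching networks) loses their affinity.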
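A common way to implement IP-based affinity is to hash the client IP and take the result modulo the number of servers. The sketch below is an illustration, not any particular load balancer's algorithm; the function name and backend list are hypothetical. It uses a fixed digest because Python's built-in `hash()` for strings is randomized per process, which would break affinity across restarts or between load balancer instances.

```python
import hashlib


def pick_backend_by_ip(client_ip, backends):
    """Map a client IP to a backend with a stable hash (illustrative sketch)."""
    # Use SHA-256 rather than hash() so every process computes the same index.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


backends = ["app-1", "app-2", "app-3"]
first = pick_backend_by_ip("203.0.113.7", backends)
# The same IP always lands on the same backend.
assert pick_backend_by_ip("203.0.113.7", backends) == first
```

Note that with a plain modulo, adding or removing a server changes the index for most clients, so most users lose their affinity at once; consistent hashing is the usual technique for reducing that reshuffling.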

2. Cookie-Based Affinity:

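- Store an identifier in a cookie on the user's browser: either the application's session identifier or a value, often set by the load balancer itself, that names the assigned backend server.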

- Configure load balancers to examine the session identifier and route subsequent requests to the corresponding server.

- This approach is more robust than IP-based affinity because the cookie travels with the browser, so the session stays on the same server even when the user's IP address changes.
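The cookie-based flow can be sketched as follows, assuming the load balancer inserts its own cookie that names the backend. The cookie name `SERVERID`, the backend names, and `route_request` are hypothetical choices for this sketch; the parsing uses the standard library's `http.cookies.SimpleCookie`.

```python
import itertools
from http.cookies import SimpleCookie

BACKENDS = ["app-1", "app-2", "app-3"]
AFFINITY_COOKIE = "SERVERID"  # hypothetical cookie name chosen for this sketch
_round_robin = itertools.cycle(BACKENDS)


def route_request(cookie_header):
    """Return (backend, set_cookie_header_or_None) for one incoming request."""
    cookies = SimpleCookie()
    cookies.load(cookie_header or "")
    morsel = cookies.get(AFFINITY_COOKIE)
    if morsel is not None and morsel.value in BACKENDS:
        # Returning client with a valid affinity cookie: reuse its backend.
        return morsel.value, None
    # New client (or stale cookie): pick a backend and ask the browser to remember it.
    backend = next(_round_robin)
    return backend, f"{AFFINITY_COOKIE}={backend}; Path=/; HttpOnly"


# First visit: no cookie yet, so a backend is assigned and a Set-Cookie value returned.
backend, set_cookie = route_request(None)
# Later visit from the same browser, which now sends the cookie back.
backend_again, _ = route_request(f"{AFFINITY_COOKIE}={backend}; theme=dark")
assert backend_again == backend
```

Checking the cookie value against the known backend list means a stale or tampered cookie simply triggers a fresh assignment instead of routing to a server that does not exist.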

3. URL Rewriting:

- Append a session identifier or token to the URLs within an application.

- Configure load balancers to examine the token and route requests accordingly.

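- This method is useful when cookies are unavailable (for example, when users disable them), provided that rewriting URLs doesn't conflict with application logic. Keep in mind that identifiers embedded in URLs can leak through server logs, browser history, and Referer headers.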
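Both halves of URL rewriting are sketched below: the application appends a token to the links it emits, and the load balancer reads that token back to pick a server. This sketch assumes a hypothetical query parameter named `sid` and a hypothetical token format `<backend>.<random>` so the backend can be recovered from the token itself; real deployments may instead look the token up in an affinity table.

```python
from urllib.parse import parse_qs, parse_qsl, urlencode, urlsplit, urlunsplit

TOKEN_PARAM = "sid"  # hypothetical query-parameter name for this sketch
BACKENDS = {"app-1", "app-2", "app-3"}


def rewrite_link(url, token):
    """Application side: append the session token to a link it emits."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query.append((TOKEN_PARAM, token))
    return urlunsplit(parts._replace(query=urlencode(query)))


def backend_from_url(url, default):
    """Load-balancer side: read the token and route on its backend prefix."""
    # Assumed token format for this sketch: "<backend>.<random>", e.g. "app-2.k9f3".
    values = parse_qs(urlsplit(url).query).get(TOKEN_PARAM)
    if values:
        backend = values[0].split(".", 1)[0]
        if backend in BACKENDS:
            return backend
    # No token, or a token naming an unknown server: fall back to normal balancing.
    return default


link = rewrite_link("/cart?item=42", "app-2.k9f3")
print(link)                             # /cart?item=42&sid=app-2.k9f3
print(backend_from_url(link, "app-1"))  # app-2
```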

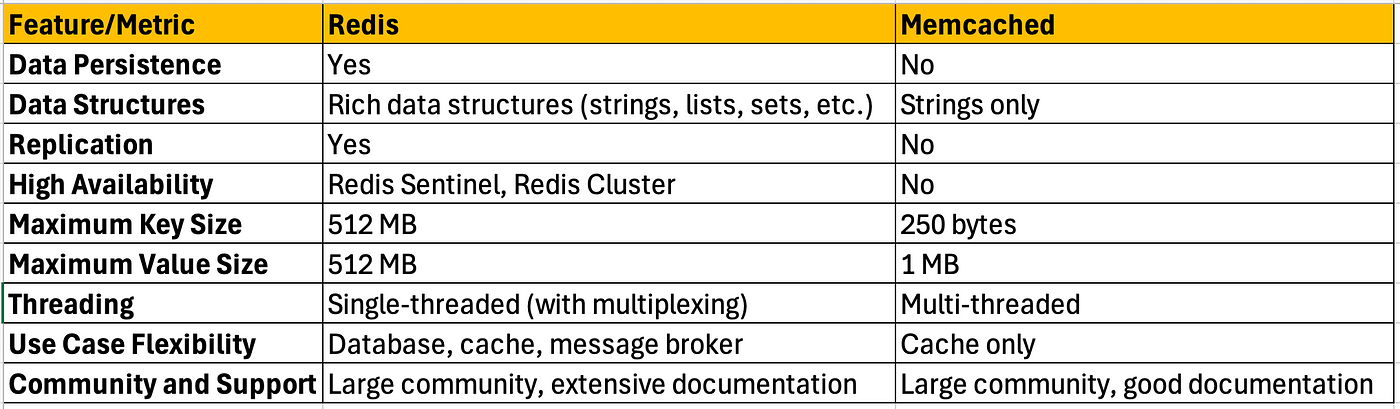

4. Session Database:

- Maintain a shared session database accessible to all backend servers.

- Store session data in the database and associate it with a unique session identifier.

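- Because any server can load the session by its identifier, the load balancer no longer has to pin a user to one server: requests can go to any healthy backend without losing session state. Strictly speaking, this replaces server affinity rather than implementing it.

- This method is particularly useful in distributed and cloud-based environments where servers are added, removed, or replaced frequently, since losing a server does not lose the sessions it was serving.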
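The sketch below shows why a shared store removes the need for affinity: two application servers share one store, so a write made through one is visible through the other. The in-memory `SharedSessionStore` is a hypothetical stand-in for a real database or cache, and the sketch ignores concurrency, expiry, and serialization, which a production store must handle.

```python
import secrets


class SharedSessionStore:
    """In-memory stand-in for a shared session database (hypothetical)."""

    def __init__(self):
        self._data = {}  # session id -> session dict

    def create(self):
        session_id = secrets.token_urlsafe(16)  # unguessable session identifier
        self._data[session_id] = {}
        return session_id

    def get(self, session_id):
        return self._data.get(session_id)

    def save(self, session_id, session):
        self._data[session_id] = session


class AppServer:
    """A stateless backend: every request loads session data from the shared store."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def add_to_cart(self, session_id, item):
        session = self.store.get(session_id)
        if session is None:
            session = {}
        session.setdefault("cart", []).append(item)
        self.store.save(session_id, session)
        return session["cart"]


store = SharedSessionStore()
server_a = AppServer("app-1", store)
server_b = AppServer("app-2", store)
sid = store.create()
server_a.add_to_cart(sid, "book")
print(server_b.add_to_cart(sid, "pen"))  # ['book', 'pen']: app-2 sees app-1's write
```

Because neither server holds session state itself, the load balancer in front of them can use plain round-robin.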

Conclusion:

Sticky sessions play a crucial role in maintaining session persistence and a consistent user experience when traffic is spread across several servers. Whether you choose IP-based affinity, cookie-based affinity, or URL rewriting, or move session state into a shared session database so that any server can handle any request, the right strategy depends on your application's constraints: client networks, cookie support, and how often servers come and go. As web environments continue to evolve, understanding these trade-offs is essential for delivering high-performance and user-friendly applications.